Generative AI in geospatial: A Pyramid of Increasing Complexity

Florent Gravin

Large Language Models LLMs like GPT have emerged as game-changers in the way we interact with technology, automate processes and generate content. They brought numerous ideas about how this revolution could be applied within geospatial applications. However, the true potential of these models lies not just in their out-of-the-box-capabilities, but in more complex ways in which they can be leveraged to meet specific challenges.

Mastering generative AI involves a progression from simple interactions to more complex and customized applications. We propose representing this path as a pyramid of knowledge, illustrating the increasing complexity of the systems involved in solving your location intelligence issues, and supporting your decision making process.

1. Basic Interaction (Chat with GPT)

The first level of interaction, and probably the most common use of LLMs, is utilizing an existing chat service that relies on a sophisticated Large Language Model (LLM), such as ChatGPT.

Workers have integrated such services into their daily routines to translate text, summarize documents or generate content.

For analyzing geospatial data, you can use GPT Data Analyst and upload any CSV or GeoJSON file. The LLM reads your data and answers your questions about its content. It can generate text or even create charts, by performing calculations based on your different entries.

Use Case: know more about geOrchestra instances

Download the GeoJSON file on GeoBretagne platform.

Ask some questions about the dataset:

- How many geOrchestra instances do we have ?

- Where are they ?

Those interactions are quite basic, and become very limited if you want to obtain a more specific question, or if you want to integrate such capabilities into your own application.

2. Advanced Prompt Design (Prompt Engineering)

The next level of interaction involves crafting and refining prompts to produce specific outputs. This effort provides contextualization for the LLMs, allowing you to frame exactly what you expect it to answer and how. You can clarify your objective, the format of the output, or provide examples and other valuable information.

Typically, application designers define a prompt template based on the requirements, and the user query is then incorporated into that template. Your application is kind of a proxy that reformulates the user query into a more adapted prompt.

Use Case: Display OpenStreetMap data on a map, based on a Natural Language request.

Human request: Display all vegetarian restaurants in Basel

Here are the different aspects that the prompt template must handle:

Extracting Information: The LLM needs to extract what the user wants to display—in this case, vegetarian restaurants—and where, in Basel.

Generating an API Request: The LLM must generate an OverPass (a free online API to fetch OSM data) request based on these parameters. It should generate a bounding box (bbox) for the location and the correct OverPass filters for the type of locations expected.

Returning the API Request: The LLM must return only the API request without any additional text.

Providing Examples: You can give some examples in the prompt to help the LLM generate the correct URL.

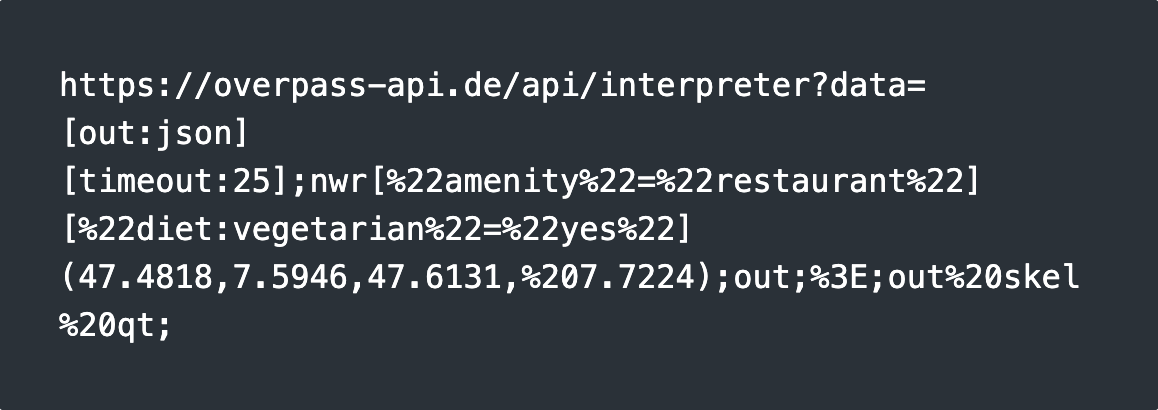

The expected output is:

This approach will only work if the LLM you rely on knows the OverPass API syntax, meaning it has been trained with OverPass specifications. This is typically the case with major models.

If you want to achieve the same results with your own data, which the model has not been trained on, you will need to advance to the next level by setting up a Retrieval-Augmented Generation (RAG) system.

3. Enhanced Contextual Responses (Retrieval-Augmented Generation)

Retrieval-Augmented Generation (RAG) is a technique for enhancing the accuracy and reliability of generative AI models by incorporating facts fetched from external sources. Since SaaS LLMs do not have access to your specific geospatial data, you need to add a retrieval phase to fetch your data and pass it as context to the LLM.

From the user request to the response, RAG typically involves several steps designed to improve the accuracy of the results. These steps often rely on interacting with a Language Model (LM), meaning that multiple requests to the LMs will be made throughout the process.

Typically, the following techniques are used to design and improve RAG systems:

- User Query: The user starts by asking a question or making a request.

- Query Reformulation: The AI might reformulate the question to ensure it’s understood correctly or to fit the format needed for further processing.

- Data Source Selection: Depending on the nature of the query, the AI determines which external data sources to use (e.g., databases, APIs).

- Data Retrieval: The system constructs a retrieval request to fetch relevant information—this might involve semantic searches, API calls, or other methods.

- Document Ranking: Retrieved documents or data are ranked by their relevance to the query.

- Contextual Integration: The most relevant data is added to the context, enriching the AI’s understanding before generating a response.

- Final Response Generation: The AI then generates a response, using both the user’s query and the retrieved data.

Use Case

Location Intelligence on Your Own Data –

When Will Garbage Be Collected in My Neighborhood, Paris 8ème?

Imagine you have a dataset that includes garbage collection schedules for Paris, but the LLM hasn’t been trained on this data. Here’s how RAG can help:

- Data Indexing: First, your data needs to be indexed in a Vector Database to enable fast and accurate retrieval.

- Query extraction: The RAG system identifies the key components of your query—what you’re asking for (garbage collection times), the dataset that contains this information, and the location (Paris 8ème).

- Geocoding: The system retrieves the geographic geometry for Paris 8ème using a geocoding service.

- Semantic search: The system performs a semantic search to find the relevant dataset, such as the garbage collection schedule. The search just returns the metadata.

- Dataset Retrieval: From metadata information, the RAG fetches the datasets features.

- Spatial Intersection: The system fetches the geospatial features (e.g., boundaries of Paris 8ème) and performs a spatial intersection with the dataset to find the specific schedule for your neighborhood.

- Final Answer: The relevant information is passed to the LLM, which interprets the data and provides you with the specific garbage collection time.

4. Custom Model Development (Fine-Tuning Models)

All previous techniques can be implemented by relying on existing LM services (GPT, Claude, Mistral, etc.). These services provide LLMs, embedding models, and other dedicated models that can be used alongside the final LLM call throughout your RAG process. However, these services are not free and will never be trained on your specific data. Moving beyond RAG to model fine-tuning allows you to build models that are much more deeply personalized to your data and use cases. With fine-tuning, you can take a general-purpose model and train it on your own specific geospatial data.

Use Cases:

Train an LLM on Your Geospatial Features:

You can fine-tune a model on your geospatial datasets so that the model can directly answer your questions without needing to retrieve any information. However, RAG is generally preferred for this use case because fine-tuning and hosting an LLM can be very complex and costly.Train an Embedding Model on Your Metadata (or Data):

In the RAG process, the retrieval phase based on semantic search is critical. In our use case, finding the correct dataset that matches the user request is essential for the answer to be relevant. Since semantic search relies on Vector Data generated by embedding models, and embedding models are relatively small, it's feasible to fine-tune them and train them on the structure of your geospatial metadata records, specifically on your metadata catalog.Train a Text-to-SQL Model to Generate PostGIS SQL:

Many models can transform text into SQL queries if they know the structure of your dataset (to use the correct columns for the query). We could train a model to build spatial queries, such as PostGIS SQL queries, to handle location intelligence by joining, aggregating, and computing geospatial indicators. We could also imagine other models like text-to-OSM, text-to-OGC-API, and so on, which can help your RAG interact directly with open geo standards.

As we can see, there are many ways to integrate generative AI and LLMs within your geospatial applications. By understanding and leveraging each level of this pyramid, organizations can unlock the full potential of generative AI, moving from general-purpose solutions to highly customized applications that drive innovation and efficiency.

Career

Interested in working in an inspiring environment and joining our motivated and multicultural teams?